Data Security Approach

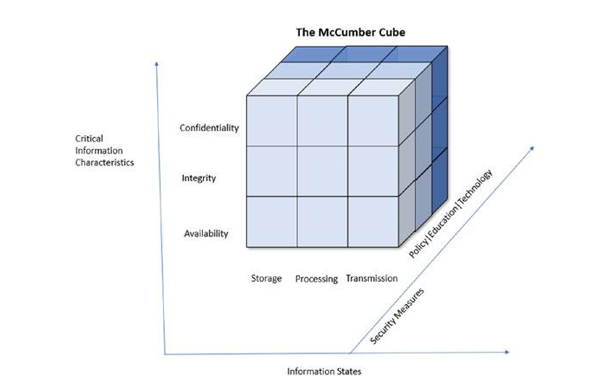

John McCumber created a model, known as the McCumber Cube, to represent the architectural approach used in computer and information security. It is a 3 × 3 × 3 cube whose 27 cells each represent an area that must be properly addressed to secure modern information systems.

The McCumber model is combined with structured and unstructured techniques to form a threat elicitation methodology within the model-driven architecture framework. Related models include the price model, the information assurance model, the asset protection model, and the CNSS (Committee on National Security Systems) model, among others.

Figure 6-9 shows the McCumber Cube and the relationships among its three dimensions: critical information characteristics, information states, and security measures.

Figure 6-9. The McCumber Cube

The framework defines three dimensions: security measures, critical information characteristics, and information states. The critical characteristics (confidentiality, integrity, and availability) must be protected in each information state (storage, processing, and transmission) through three kinds of security measures: creating policies, educating people, and providing appropriate technologies.

At the intersection of technology, transmission, and confidentiality lie authorization and authentication. Because data is always either moving from one point to another or at rest, it must be safeguarded in both states.
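To make the 3 × 3 × 3 structure concrete, the following Python sketch enumerates the 27 cells and reports which cells have no recorded control. The function names and the `controls` mapping are hypothetical illustrations, not part of McCumber's model; the single populated cell is the technology/transmission/confidentiality cell described above.

```python
from itertools import product

# The three dimensions of the McCumber Cube, as named in the text.
CHARACTERISTICS = ("confidentiality", "integrity", "availability")
STATES = ("storage", "processing", "transmission")
MEASURES = ("policy", "education", "technology")


def cube_cells():
    """Return every (characteristic, state, measure) combination: 3 x 3 x 3 = 27 cells."""
    return list(product(CHARACTERISTICS, STATES, MEASURES))


def coverage_gaps(controls):
    """Return the cells that have no control recorded.

    `controls` is a hypothetical mapping from a cell tuple to a list of
    control names; a cell that is missing or maps to an empty list is a gap.
    """
    return [cell for cell in cube_cells() if not controls.get(cell)]


if __name__ == "__main__":
    # Record controls for one cell only; the other 26 cells remain gaps.
    controls = {
        ("confidentiality", "transmission", "technology"): ["authorization", "authentication"],
    }
    print(len(cube_cells()))             # 27
    print(len(coverage_gaps(controls)))  # 26
```

Treating the cube as a checklist like this is one way to use it during a review: every cell without an assigned policy, training activity, or technology is a candidate gap.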

Data must be encrypted in accordance with data management encryption standards and data classification and protection standards. Cryptographic keys and digital certificates must be securely created, distributed, maintained, stored, and disposed of. Encryption of stored data can be implemented at several layers: host bus adapter (HBA)-based encryption, array-based encryption, and drive encryption.
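The key lifecycle above can be sketched as a small state machine. This is a minimal illustration under stated assumptions: the `ManagedKey` class, the state names, and the allowed transitions are hypothetical, and the code only tracks lifecycle state around random key material; it does not encrypt anything. Production systems usually delegate this work to a hardware security module or a key management service.

```python
import secrets
from enum import Enum


class KeyState(Enum):
    CREATED = "created"
    DISTRIBUTED = "distributed"
    ACTIVE = "active"        # in use and being maintained
    STORED = "stored"        # archived; not used for new encryption
    DESTROYED = "destroyed"


# Hypothetical transition policy for illustration; real policies vary.
ALLOWED = {
    KeyState.CREATED: {KeyState.DISTRIBUTED, KeyState.DESTROYED},
    KeyState.DISTRIBUTED: {KeyState.ACTIVE, KeyState.DESTROYED},
    KeyState.ACTIVE: {KeyState.STORED, KeyState.DESTROYED},
    KeyState.STORED: {KeyState.ACTIVE, KeyState.DESTROYED},
    KeyState.DESTROYED: set(),
}


class ManagedKey:
    """Hypothetical wrapper that tracks a symmetric key through its lifecycle."""

    def __init__(self, key_id, length=32):
        self.key_id = key_id
        # The secrets module draws from the OS CSPRNG, suitable for key material.
        self._material = bytearray(secrets.token_bytes(length))
        self.state = KeyState.CREATED
        self.history = [KeyState.CREATED]

    def transition(self, new_state):
        """Move to new_state, rejecting transitions the policy does not allow."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"{self.state.value} -> {new_state.value} is not allowed")
        self.state = new_state
        self.history.append(new_state)

    def material(self):
        """Return a copy of the key bytes unless the key has been destroyed."""
        if self.state is KeyState.DESTROYED:
            raise RuntimeError("key has been destroyed")
        return bytes(self._material)

    def destroy(self):
        """Overwrite the key buffer with zeros, then mark the key destroyed."""
        if KeyState.DESTROYED not in ALLOWED[self.state]:
            raise ValueError("key is already destroyed")
        for i in range(len(self._material)):
            self._material[i] = 0
        self.transition(KeyState.DESTROYED)


if __name__ == "__main__":
    key = ManagedKey("backup-key-01")
    for state in (KeyState.DISTRIBUTED, KeyState.ACTIVE, KeyState.STORED):
        key.transition(state)
    key.destroy()
    print([s.value for s in key.history])
    # ['created', 'distributed', 'active', 'stored', 'destroyed']
```

Zeroing a `bytearray` is only best-effort disposal in Python, because copies returned by `material()` are not wiped. That limitation is one reason key material is usually kept inside dedicated hardware rather than application memory.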

Data masking, also known as obfuscation, makes sensitive data unidentifiable while keeping it available for storage and analytics. Static data masking (SDM) and dynamic data masking (DDM) are the two main approaches, each with its own strengths, weaknesses, and suitable use cases:

• SDM (static data masking) masks data at rest by creating a copy of an existing dataset and hiding or eliminating all sensitive data. The masked copy, which no longer contains sensitive values, can then be stored, shared, and used. SDM suits software and application development and training. However, it does not scale well to larger datasets in which multiple combinations of access levels are introduced, and it is not used for analytical purposes.

• DDM (dynamic data masking) is the most common type of masking and scales to complex use cases. It applies masking at query time, so no data is copied or moved in or out. DDM is used where identified sensitive data must be presented in a restricted form, because business users often do not need complete access to sensitive data in applications and reports.
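The difference between the two approaches can be sketched in Python. `static_mask` produces a masked copy once, as SDM does, while `DynamicMaskingView` leaves the source rows untouched and masks sensitive fields at query time depending on the caller's role, as DDM does. The masking rule (keep the last four characters), the sample records, the field names, and the role names are all hypothetical; in practice DDM is usually provided by the database engine itself rather than by application code.

```python
# Hypothetical sample data and classification; "ssn" is treated as sensitive.
CUSTOMERS = [
    {"id": 1, "name": "Ana", "ssn": "123-45-6789"},
    {"id": 2, "name": "Ben", "ssn": "987-65-4321"},
]
SENSITIVE = {"ssn"}


def mask_value(value):
    """Illustrative rule: keep the last four characters, mask the rest with '*'."""
    text = str(value)
    if len(text) <= 4:
        return "*" * len(text)  # too short to reveal any part safely
    return "*" * (len(text) - 4) + text[-4:]


def _mask_row(row, sensitive):
    """Return a new row with every sensitive field masked."""
    return {k: (mask_value(v) if k in sensitive else v) for k, v in row.items()}


def static_mask(rows, sensitive):
    """SDM: build a masked copy once; the copy can be shared without sensitive values."""
    return [_mask_row(row, sensitive) for row in rows]


class DynamicMaskingView:
    """DDM: source rows stay in place; masking is applied per query by role."""

    def __init__(self, rows, sensitive, unmasked_roles):
        self._rows = rows
        self._sensitive = sensitive
        self._unmasked_roles = set(unmasked_roles)

    def query(self, role, predicate=lambda row: True):
        matches = (row for row in self._rows if predicate(row))
        if role in self._unmasked_roles:
            return [dict(row) for row in matches]
        return [_mask_row(row, self._sensitive) for row in matches]


if __name__ == "__main__":
    print(static_mask(CUSTOMERS, SENSITIVE)[0]["ssn"])  # *******6789
    view = DynamicMaskingView(CUSTOMERS, SENSITIVE, unmasked_roles={"compliance_officer"})
    print(view.query("analyst")[1]["ssn"])               # *******4321
    print(view.query("compliance_officer")[0]["ssn"])    # 123-45-6789
```

The sketch mirrors the trade-off in the list: the static copy is fixed at one masking level, so supporting several access levels would mean producing several copies, whereas the dynamic view decides per request and keeps a single source of data.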

Meeting regulatory compliance requirements in non-production environments is also essential. Data loss prevention (DLP) and digital rights management (DRM) must also be managed and secured.